Dataflows Gen 2 vs Gen 1

Microsoft Fabric has brought together and simplified all the components required for a complete analytics platform into one unified SaaS product. It provides multiple options for extracting, transforming, and loading (ETL) your data into its OneLake foundation, based on your specific needs and preferences. You can build Data Factory Pipelines to copy data and/or orchestrate other components, write Python in a notebook, or use Power Query in a Dataflow Gen 2, for example.

In this entry, we dive into Dataflows Gen 2. We will help you understand how it differs from the previous generation of Dataflows, how to use the new generation, and what options exist for migrating existing dataflows to Gen 2.

What is Dataflows Gen 2?

Dataflows Gen 2 is the new generation of dataflows included in Microsoft Fabric. It lets us ingest and transform data using Power Query, just like Gen 1, but it extends the previous generation in several ways. Two of the major additions that we will discuss are (1) the ability to deliver data to additional destinations, and (2) a simple option to append new data, rather than being limited to overwriting everything or configuring incremental refresh.

Gen 1 vs Gen 2 Differences

A question that always comes to mind with a new version of any service or application is how it affects existing processes and how it differs from previous versions.

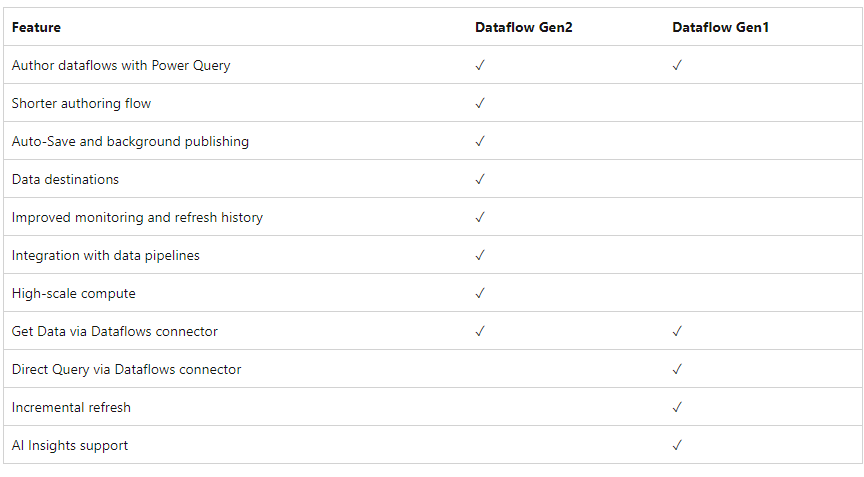

Dataflows Gen 2 has much to add when compared to Gen 1, so let’s check the main differences:

Dataflows Gen 2 – Feature Overview

The chart above shows only two features that the two generations of Dataflows share: the Power Query authoring experience, and the connectivity options that give the user access to more than 140 data sources and custom connectors.

Additionally, Dataflows Gen 2 has a built-in auto-save experience. Work is saved to the cloud automatically, so we can pick up where we left off and no longer need to confirm the save on exit. Alongside auto-save, Gen 2 also supports background publishing, which runs several validations.

Data Destinations

The choice of data destination is one of the major differences between the two generations. Gen 1 only offers internal/staging storage to be consumed in datasets (or a bring-your-own-lake scenario), whereas Gen 2 lets us select the destination for the transformed data and save it in:

- Fabric Lakehouse

- Azure Data Explorer (Kusto)

- Azure Synapse Analytics (SQL DW)

- Azure SQL Database

With this new option, combined with pipelines, we can now automate the consumption of dataflow output and perform tasks ranging from running a SQL query to running a Python notebook.

Append or Overwrite

With the new destinations, there is no need to configure incremental refresh: the data is stored in the destination we selected, where new data can simply be appended and consumed later. The new storage capabilities are complemented by a new refresh history and monitoring experience that gives us detailed information on each data refresh.

Performance

Through its integration with the Fabric Lakehouse and Warehouse, Dataflows Gen 2 gains an enhanced compute engine that improves the performance of the ETL process.

Licensing

Dataflow Gen 2 requires a Fabric capacity, while the previous version is available with a Pro license and above. However, for tenants already on Premium, Microsoft enables Fabric on existing capacities without any licensing change, which means that Dataflow Gen 2 is currently available on all Power BI Premium capacities.

When and why to use Dataflow Gen 2

With two generations available, one of the questions that comes to mind is which one is the right fit for our requirements. Despite all the differences and improvements in the new generation, the answer comes down to licensing.

Dataflow Gen 1 works with Power BI Pro, PPU, and Premium licenses, while Dataflow Gen 2 only works in a Fabric capacity. If you have Fabric licensing, Gen 2 is the better choice: it can significantly improve performance and optimize the way you store and consume data.
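The licensing rule described above can be written down as a small check. This is only a sketch of the statements made in this article, not an official licensing tool: the function name `supports_gen2` and the license labels are hypothetical, and the Premium capacity entry reflects the current allowance described above, which may change.

```python
# Hypothetical license labels; they encode only the rules stated in this article.
GEN2_CAPABLE = {"fabric capacity", "premium capacity"}  # Premium: current allowance
GEN1_ONLY = {"pro", "ppu"}


def supports_gen2(license_type: str) -> bool:
    """Return True if, per this article, the license can run Dataflow Gen 2."""
    key = license_type.strip().lower()
    if key in GEN2_CAPABLE:
        return True
    if key in GEN1_ONLY:
        return False
    raise ValueError(f"unrecognized license type: {license_type!r}")


for name in ("Pro", "PPU", "Premium capacity", "Fabric capacity"):
    verdict = "Gen 2 available" if supports_gen2(name) else "Gen 1 only"
    print(name, "->", verdict)
```

Raising an error for unknown labels, instead of silently returning `False`, keeps a typo from being mistaken for a licensing answer.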

Another advantage of the new generation is the destination options, which allow us to use the SQL Endpoint to create or edit a Power BI dataset that consumes the data and feeds a report. This means we can now build an end-to-end solution inside the Fabric environment, from ingesting data and running the ETL to creating reports that can be shared across the organization.

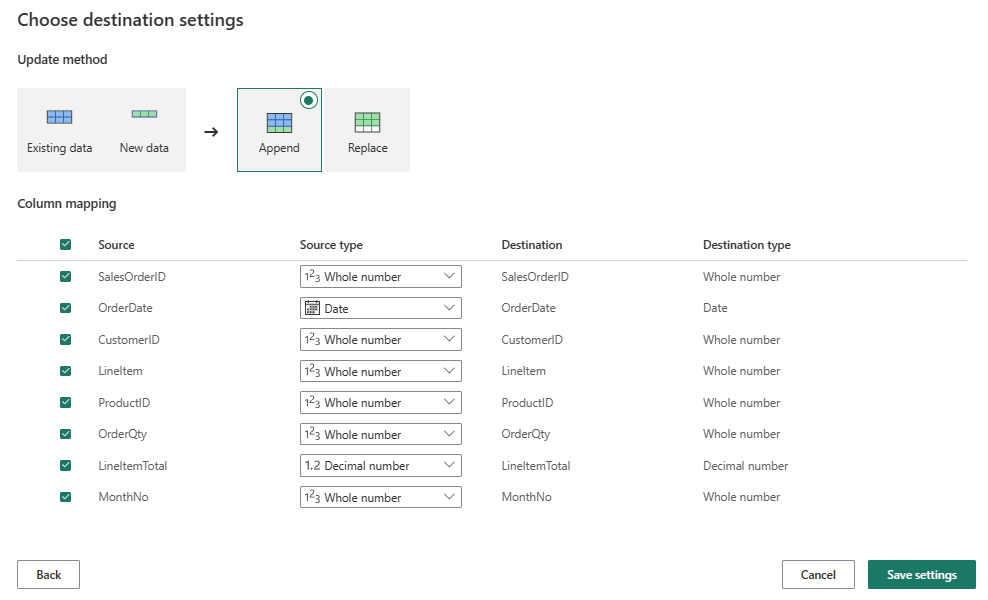

Storage and refresh setup on Dataflow Gen 2

One of the improvements in Dataflows Gen 2 is the storage and refresh setup. The new generation lets us choose the destination, whether it’s a Lakehouse or an Azure SQL database, and then decide whether each refresh overwrites the previous data or appends to the existing table.

Dataflows Gen 2 – Destination Settings.

This setup is simple and is done within the dataflow's own workflow. It changes the way the data is stored, and, depending on the selected option, it can also reduce the refresh time.

With pipelines, the refresh is simplified and can be triggered at any time. Previous versions relied on scheduled refresh, which could only run a limited number of times depending on your license. The new workflow allows faster and more timely refreshes of your data, adjusted to your business needs.
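To make the difference between the two update options concrete, here is a minimal sketch in plain Python. It only imitates the behavior on an in-memory list of rows; the function name `write_to_destination` and the mode strings are hypothetical and are not part of any Fabric API.

```python
from typing import Dict, List

Row = Dict[str, object]


def write_to_destination(table: List[Row], new_rows: List[Row], mode: str) -> List[Row]:
    """Return the destination table contents after one refresh."""
    if mode == "overwrite":
        # Previous contents are discarded; only the refreshed rows remain.
        return list(new_rows)
    if mode == "append":
        # Previous contents are kept; the refreshed rows are added at the end.
        return table + list(new_rows)
    raise ValueError(f"unknown update mode: {mode!r}")


existing = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}]
refreshed = [{"id": 3, "amount": 30}]

overwritten = write_to_destination(existing, refreshed, "overwrite")
appended = write_to_destination(existing, refreshed, "append")
print(len(overwritten), len(appended))
```

With append, each refresh only has to bring in the new rows, which is why the selected option can affect refresh time. Note that a plain append like this does not deduplicate, so loading the same rows twice would store them twice.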

How to migrate from Gen 1 to Gen 2

Because Gen 2 builds on Premium licensing, most users already have several Gen 1 dataflows in place, and the new concept of templates simplifies the upgrade to the new generation.

A dataflow template packages the entire Power Query configuration. It contains all the definitions in a form readable by every Power Query environment, including Dataflow Gen 2, Dataflow Gen 1, and Excel. This gives us a smooth and easy transition from our previous dataflows, because the definitions from our previous files can be brought into the new dataflow.

Credentials and connections aren’t stored in the template file and need to be set up during the import step, which keeps sensitive connection details out of the file.

Currently there is no out-of-the-box solution for a bulk migration from Dataflow Gen 1 to Gen 2. If you need help with a bulk migration of your dataflows to the new generation, reach out so that we can help you achieve this.
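The idea that a template carries definitions but not credentials can be illustrated with a small conceptual sketch. The dictionary shapes and the `export_template` / `import_template` names below are hypothetical and do not represent the real template file format; keying credentials by query name is also a simplification.

```python
from typing import Dict

# Hypothetical in-memory shapes; this is NOT the real template file format.
Queries = Dict[str, str]  # query name -> Power Query (M) text


def export_template(queries: Queries, credentials: Dict[str, str]) -> Dict[str, Queries]:
    """Package the query definitions only; credentials are deliberately left out."""
    return {"queries": dict(queries)}


def import_template(template: Dict[str, Queries], credentials: Dict[str, str]) -> Dict[str, object]:
    """Rebuild a dataflow from a template; credentials must be supplied again."""
    missing = sorted(name for name in template["queries"] if name not in credentials)
    if missing:
        raise ValueError(f"credentials required for: {', '.join(missing)}")
    return {"queries": dict(template["queries"]), "credentials": dict(credentials)}


gen1_queries = {"Sales": 'let Source = Sql.Database("server", "db") in Source'}
template = export_template(gen1_queries, {"Sales": "original secret"})
print("credentials" in template)

gen2_dataflow = import_template(template, {"Sales": "re-entered at import"})
print(sorted(gen2_dataflow["queries"]))
```

Sharing the template is therefore lower risk than sharing a live dataflow: whoever imports it has to provide their own access to the sources.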

Conclusion

With the powerful advantages of data destinations, appending options, and performance improvements, most organizations should consider migrating any existing Dataflows Gen 1 to Gen 2. The flexible Fabric licensing options provide several different configurations to allow you to enjoy these advantages. Reach out to us if you need help understanding licensing or to create a large-scale migration plan.

Please contact us for a free data strategy briefing to learn how Fabric can help you accelerate, de-risk, and streamline your data and analytics strategy.